An Introduction to gRPC: Building Distributed Systems With Efficiency and Scalability in Mind

A distributed system comprises independent computers with components spread across a network; each computer in the network, referred to as a node, possesses processing power, memory, and storage. Nodes communicate by relaying messages, and the system benefits from scalability, fault tolerance, and performance. Workload distribution across nodes allows distributed systems to handle more load than individual computers.

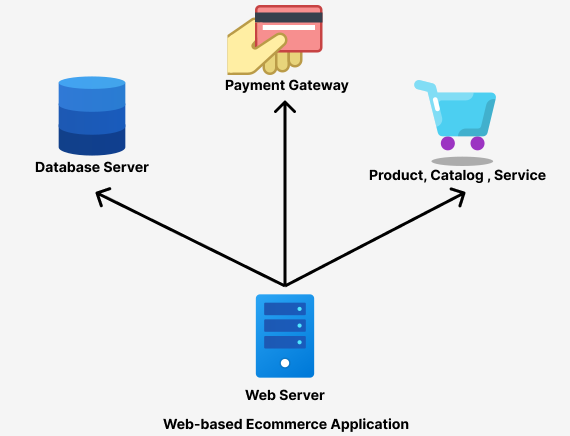

An example of a distributed system is an e-commerce site that consists of several components, such as a web server, a database server, a payment gateway, and a product catalog service.

As data volumes grow, a traditional monolithic system becomes a single point of failure and can become prohibitively expensive to scale vertically. Organizations, therefore, need efficient and scalable distributed solutions that break their systems down into smaller, more manageable services distributed across multiple servers. This approach enables them to scale their applications horizontally by adding more servers as needed to handle traffic and data volumes.

What is gRPC?

gRPC is an open-source remote procedure call (RPC) framework that facilitates the development of high-performance, scalable, and efficient distributed systems. Developed at Google and open-sourced in 2015, gRPC efficiently connects systems written in different languages, using a pluggable architecture that supports load balancing, tracing, health checking, and authentication.

To comprehend gRPC, we must first understand Remote Procedure Calls (RPC).

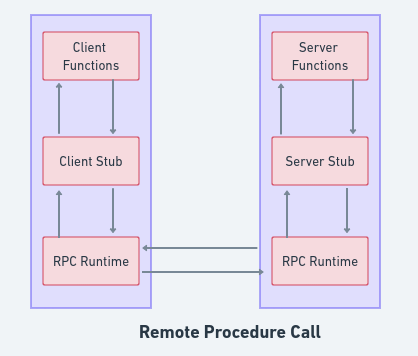

RPC enables two distinct computer programs to communicate with each other over a network. In this communication model, one program requests a service or procedure from another program, which processes the request and sends the results back to the first program. RPC follows the client-server model, where the requesting program acts as the client and the service-providing program operates as the server.

To visualize RPC, imagine making a phone call to request a service from another person, where both parties have a conversation to complete the request. Likewise, in RPC, the two programs exchange messages to accomplish the requested service or procedure.

The process flow of a remote procedure call request consists of the following steps:

- First, the client stub is invoked by the client.

- Next, the client stub packs the necessary parameters into a message and performs a system call to send that message to the server.

- Afterward, the message is transmitted from the client to the server using the client’s operating system.

- Upon receipt, the server’s operating system delivers the message to the server stub.

- The server stub then extracts the parameters from the message.

- Finally, the server stub invokes the server procedure to process the request.
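The steps above can be sketched in plain JavaScript. This is a minimal illustration, not how any real RPC library is implemented: the `add` procedure, the JSON message format, and the in-process `transport` function (standing in for the operating systems and the network) are all assumptions made for the example. The sketch also returns the result to the client, which the list above stops short of.

```javascript
// Server procedure: the actual work the client wants done remotely.
function add(a, b) {
  return a + b;
}

// Procedures the (hypothetical) server exposes, looked up by name.
const procedures = new Map([['add', add]]);

// Server stub: extracts the parameters from the message and invokes the procedure.
function serverStub(message) {
  const { procedure, params } = JSON.parse(message);
  const fn = procedures.get(procedure);
  if (!fn) {
    return JSON.stringify({ error: `unknown procedure: ${procedure}` });
  }
  return JSON.stringify({ result: fn(...params) });
}

// Stand-in for the client OS, the network, and the server OS.
function transport(message) {
  return serverStub(message);
}

// Client stub: packs the parameters into a message, sends it, and unpacks the reply.
function clientStub(procedure, ...params) {
  const request = JSON.stringify({ procedure, params });
  const reply = JSON.parse(transport(request));
  if (reply.error) {
    throw new Error(reply.error);
  }
  return reply.result;
}

// To the caller, the remote procedure looks like a local function call.
console.log(clientStub('add', 2, 3)); // 5
```

The point of the stubs is that neither the caller nor the `add` procedure needs to know anything about messages or transport.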

How does gRPC work?

gRPC builds on Remote Procedure Call (RPC) technology to facilitate communication between disparate services, and it uses Protocol Buffers as both its interface definition language and its message format. Protocol Buffers encode structured data in a compact binary form that systems can exchange.

When a client makes a request to a server using a stub, which acts as a client-side representation of the server-side service, the request is transmitted as a serialized protocol buffer message, containing the necessary data for the request.

On the server side, the message is then deserialized, and the relevant service method is invoked to process the request. The server subsequently sends the response back to the client as another serialized protocol buffer message.
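To make "serialized protocol buffer message" more concrete, here is a hand-rolled sketch of the Protocol Buffers wire format for a message with a single string field numbered 1. In practice a library does this for you; the function names below are hypothetical, and the sketch only handles payloads short enough for a one-byte length.

```javascript
// Encode a message whose only field is a string with field number 1.
function encodeStringField1(text) {
  const payload = Buffer.from(text, 'utf8');
  if (payload.length > 127) {
    throw new RangeError('this sketch only handles payloads up to 127 bytes');
  }
  // Tag byte: (field number 1 << 3) | wire type 2 (length-delimited) = 0x0a.
  // It is followed by the payload length and then the UTF-8 bytes.
  return Buffer.concat([Buffer.from([0x0a, payload.length]), payload]);
}

// Decode the same single-field message back into a string.
function decodeStringField1(bytes) {
  if (bytes[0] !== 0x0a) {
    throw new Error('unexpected tag byte');
  }
  const length = bytes[1];
  return bytes.subarray(2, 2 + length).toString('utf8');
}

const wire = encodeStringField1('World');
console.log(wire.toString('hex'));     // 0a05576f726c64
console.log(decodeStringField1(wire)); // World
```

Field names never appear on the wire, only field numbers, which is one reason the encoded form is so compact.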

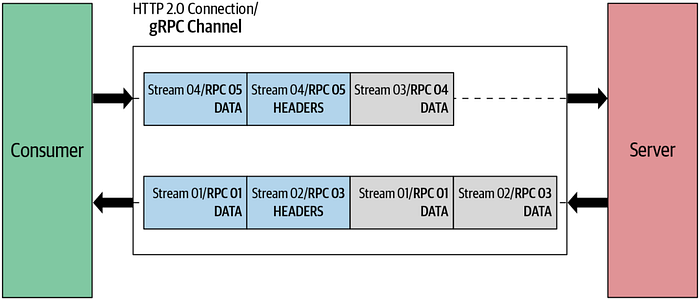

gRPC also uses HTTP/2 as its transport protocol. HTTP/2 provides a way for a client and server to communicate through a single TCP connection. This connection can contain multiple streams of bytes flowing bidirectionally. In the context of gRPC, a stream in HTTP/2 maps to one RPC call. When data is passed between the client and server, it is divided into multiple frames, with each frame assigned a stream ID. This allows multiple messages to be transmitted over a single connection and enables message multiplexing.
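The multiplexing idea can be illustrated with a small simulation. This is not the real HTTP/2 frame layout: the `{ streamId, payload }` frame shape, the 4-byte frame size, and the helper names are assumptions chosen to show how frames from different streams can share one connection and still be reassembled.

```javascript
// Split a message into frames of at most `size` bytes, each tagged with a stream ID.
function toFrames(streamId, message, size) {
  const data = Buffer.from(message, 'utf8');
  const frames = [];
  for (let offset = 0; offset < data.length; offset += size) {
    frames.push({ streamId, payload: data.subarray(offset, offset + size) });
  }
  return frames;
}

// Interleave the frames of two streams, as they might appear on one connection.
function interleave(a, b) {
  const out = [];
  for (let i = 0; i < Math.max(a.length, b.length); i++) {
    if (i < a.length) out.push(a[i]);
    if (i < b.length) out.push(b[i]);
  }
  return out;
}

// Receiver side: group frames by stream ID and rebuild each message.
function demultiplex(frames) {
  const chunks = new Map();
  for (const { streamId, payload } of frames) {
    if (!chunks.has(streamId)) chunks.set(streamId, []);
    chunks.get(streamId).push(payload);
  }
  const messages = new Map();
  for (const [streamId, parts] of chunks) {
    messages.set(streamId, Buffer.concat(parts).toString('utf8'));
  }
  return messages;
}

// Two RPCs in flight at once on the same simulated connection.
const connection = interleave(
  toFrames(1, 'first RPC request', 4),
  toFrames(3, 'second RPC request', 4)
);
const received = demultiplex(connection);
console.log(received.get(1)); // first RPC request
console.log(received.get(3)); // second RPC request
```

Because every frame carries its stream ID, the receiver can untangle the interleaved frames without needing a separate connection per call.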

gRPC API service methods

Service methods define the functionality that clients can call remotely in a gRPC API. These methods are specified in a .proto file, written in the Protocol Buffers Interface Definition Language (IDL). This file is used to generate the server and client code for gRPC.

There are four types of service methods:

- Unary RPC: this is a straightforward communication method where the client sends a request to the server and awaits a response. It’s useful when a prompt response is needed.

- Server Streaming RPC: the client sends a single request to the server, and the server responds with a stream of messages. The client reads the messages as they arrive, which is beneficial when the server has a large amount of data to transmit to the client.

- Client Streaming RPC: this is a method where the client transmits a stream of messages to the server, and the server responds with a single response. The server processes the message stream as it arrives and sends a response to the client when it’s done. This method is useful when the client has a lot of data to transmit to the server.

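- Bidirectional Streaming RPC: both the client and the server send a stream of messages. The two streams operate independently, so each side can read and write in whatever order suits the application, while the order of messages within each stream is preserved. It’s useful when both parties need to exchange large amounts of data or communicate in real time.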
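The four shapes can be modeled with ordinary JavaScript functions and async generators. This is only an analogy for the request/response patterns, not the gRPC API: every function name here is hypothetical, and the bidirectional example simply echoes each request, whereas in real gRPC the two streams are independent.

```javascript
// Unary: one request in, one response out.
async function unary(request) {
  return `Hello ${request}`;
}

// Server streaming: one request in, a stream of responses out.
async function* serverStreaming(request) {
  for (let i = 1; i <= 3; i++) {
    yield `${request} #${i}`;
  }
}

// Client streaming: a stream of requests in, one response out.
async function clientStreaming(requests) {
  let count = 0;
  for await (const _ of requests) {
    count++;
  }
  return `received ${count} messages`;
}

// Bidirectional streaming: a stream of requests in, a stream of responses out.
async function* bidirectional(requests) {
  for await (const request of requests) {
    yield `echo ${request}`;
  }
}

// Helper: turn an array into an async stream of items.
async function* streamOf(items) {
  yield* items;
}

// Helper: read a whole stream into an array.
async function collect(stream) {
  const out = [];
  for await (const item of stream) {
    out.push(item);
  }
  return out;
}

async function demo() {
  return {
    unary: await unary('World'),
    serverStreaming: await collect(serverStreaming('part')),
    clientStreaming: await clientStreaming(streamOf(['a', 'b', 'c'])),
    bidirectional: await collect(bidirectional(streamOf(['ping', 'pong']))),
  };
}

demo().then((results) => console.log(results));
```

The only thing that changes between the four is whether each side sends a single message or a stream of them.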

Advantages of gRPC

gRPC stands out among alternative communication technologies, such as RESTful APIs and SOAP-based web services, due to the following advantages:

- gRPC is language-agnostic. It supports many programming languages, including C++, Java, Python, Go, Ruby, and more, allowing developers to choose the language they are most comfortable with.

- gRPC is highly performant due to its compact binary serialization and optional message compression, which result in faster data transfer. Additionally, it uses HTTP/2, whose multiplexing and header compression further improve efficiency.

- gRPC provides code generation capabilities that use Protocol Buffers to define the service and message interfaces, allowing developers to easily maintain and update the codebase across different languages.

- gRPC offers strongly typed contracts that use Protocol Buffers to define the service contract. This ensures that the data being transmitted is consistent and validated, which reduces the chance of errors and bugs.

- gRPC supports bi-directional streaming, allowing both the client and server to send and receive data simultaneously, which enables real-time communication and reduces latency.

- gRPC is highly scalable and can handle large volumes of traffic. It uses load balancing and service discovery, allowing multiple instances of the same service to be deployed, which provides fault tolerance and high availability.

Building a gRPC server and client in Node.js

First, we need to create a **.proto** file that defines the service methods and data structures. Let’s create a file called greeting.proto where we define our services.

    syntax = "proto3";

    package greeting;

    service GreetingService {
      rpc SayHello (HelloRequest) returns (HelloResponse) {}
    }

    message HelloRequest {
      string name = 1;
    }

    message HelloResponse {
      string message = 1;
    }

This file defines a GreetingService with a single SayHello method that takes a HelloRequest message and returns a HelloResponse message.

Next, we need to install some dependencies, which include:

- @grpc/grpc-js

- @grpc/proto-loader

You can install them as shown below:

    npm i @grpc/grpc-js
    npm i @grpc/proto-loader
    npm i grpc-tools

The grpc-tools package is optional for this example. It provides tooling for generating static code from .proto files, whereas the code below loads greeting.proto at runtime with @grpc/proto-loader.

Create a file called server.js to implement the server side of the code. This code creates a gRPC server that listens on port 50051 and implements the sayHello method. This method takes a call object with a request property that contains a name field, and a callback function that sends a message field back to the client.

    const grpc = require('@grpc/grpc-js');
    const protoLoader = require('@grpc/proto-loader');

    const PROTO_PATH = __dirname + '/greeting.proto';

    // Load the .proto file at runtime and pick out the greeting package.
    const packageDefinition = protoLoader.loadSync(PROTO_PATH);
    const greetingProto = grpc.loadPackageDefinition(packageDefinition).greeting;

    function main() {
      const server = new grpc.Server();

      server.addService(greetingProto.GreetingService.service, {
        // call.request holds the HelloRequest; fall back to 'World' if no name was sent.
        sayHello: (call, callback) => {
          const name = call.request.name || 'World';
          callback(null, { message: `Hello ${name}!` });
        },
      });

      server.bindAsync(
        '127.0.0.1:50051',
        grpc.ServerCredentials.createInsecure(),
        (error, port) => {
          if (error) {
            console.error(error);
            return;
          }
          console.log(`Server started on port ${port}`);
          server.start();
        }
      );
    }

    main();

Next, create a file called client.js to implement the client side. This code creates a gRPC client that sends a **sayHello** request to the server with a name field of **World**. When the response is received, the client logs its message field to the console.

    const grpc = require('@grpc/grpc-js');
    const protoLoader = require('@grpc/proto-loader');

    const PROTO_PATH = __dirname + '/greeting.proto';

    // Load the same .proto file the server uses.
    const packageDefinition = protoLoader.loadSync(PROTO_PATH);
    const greetingProto = grpc.loadPackageDefinition(packageDefinition).greeting;

    const client = new greetingProto.GreetingService(
      'localhost:50051',
      grpc.credentials.createInsecure()
    );

    // Send a HelloRequest and log the message field of the HelloResponse.
    client.sayHello({ name: 'World' }, (error, response) => {
      if (error) {
        console.error(error);
      } else {
        console.log(response.message);
      }
    });

Run the server and client in separate terminal windows by running node server.js and node client.js respectively. You should see the message ‘Hello World!’ logged to the console by the client.

gRPC vs HTTP/REST

gRPC and HTTP/REST are two popular approaches to communication in distributed systems. Below is a comparison of their performance, scalability, and features.

Performance: gRPC is generally faster than HTTP/REST due to its binary format, which reduces the payload size and speeds up serialization and deserialization. The HTTP/2 protocol used by gRPC enables multiplexing and header compression, making it more efficient than the HTTP/1.1 protocol that many REST APIs still use. gRPC serializes messages with Protocol Buffers, which are typically smaller and faster to parse than the JSON commonly used by REST APIs.
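As a rough illustration of the payload-size point, the sketch below compares the JSON encoding of a tiny object with a hand-rolled Protocol Buffers encoding of the same value. The message (a single integer field numbered 1, called `id` here) and the helper names are assumptions made for the example, and one small message says nothing definitive about real-world performance.

```javascript
// Encode a non-negative integer as a protobuf varint: 7 bits per byte,
// least significant group first, high bit set on every byte except the last.
function encodeVarint(value) {
  const bytes = [];
  let rest = value;
  while (rest > 0x7f) {
    bytes.push((rest % 128) | 0x80);
    rest = Math.floor(rest / 128);
  }
  bytes.push(rest);
  return Buffer.from(bytes);
}

// Encode a message whose only field is an integer with field number 1.
function encodeItem(id) {
  // Tag byte: (field number 1 << 3) | wire type 0 (varint) = 0x08.
  return Buffer.concat([Buffer.from([0x08]), encodeVarint(id)]);
}

const item = { id: 150 };
const asJson = Buffer.from(JSON.stringify(item), 'utf8');
const asProtobuf = encodeItem(item.id);

console.log(asJson.toString('utf8'));    // {"id":150}
console.log(asJson.length);              // 10
console.log(asProtobuf.toString('hex')); // 089601
console.log(asProtobuf.length);          // 3
```

Most of the saving comes from replacing the field name and punctuation with a one-byte tag, which is why the difference tends to be largest for messages with many small fields.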

Scalability: gRPC is highly scalable due to its use of HTTP/2 and its ability to handle multiple requests and responses concurrently. gRPC supports load balancing and service discovery natively, making it easy to scale applications. REST APIs can also be scaled, but they require additional infrastructure and configurations.

Features: gRPC offers several features that REST APIs do not provide out of the box, including bidirectional streaming, flow control, and a standard set of status codes for error handling. Additionally, gRPC generates client and server stubs for multiple programming languages automatically from a single proto file, reducing the amount of code developers need to write. REST APIs are simpler to implement and can be accessed using any HTTP client, making them more flexible than gRPC in certain scenarios.

Conclusion

gRPC is an efficient and scalable framework for building distributed systems that offers many benefits to developers. It supports multiple programming languages and is highly performant due to its binary serialization and compressed data formats. Additionally, it offers built-in features for load balancing and authentication and supports bi-directional streaming. While it may not be as flexible as REST APIs, it excels in scenarios such as microservices architecture, real-time applications, high-performance networking, and cross-language interoperability. By leveraging these advanced features, developers can build efficient and robust distributed systems tailored to specific needs.

I hope you found this article useful. Thank you!!!

Originally published at https://semaphoreci.com on July 19, 2023.